ChatGPT and AlphaGo: Exploring Major Breakthroughs in AI Development

The idea of machines that can think like humans—or even outperform human intellect—has fascinated people for centuries. Science fiction authors, visionary inventors, and bold thinkers imagined artificial intelligence (AI) long before it became part of our daily lives. Today, AI appears to be everywhere, from virtual assistants answering our questions to AI-generated artworks portraying whimsical pandas.

But how did humanity’s abstract ideas about intelligent machines turn into the powerful AI technologies we know today? Interestingly, the ancient board game Go played an unexpected yet crucial role in the evolution of AI.

This article is adapted from a video on our YouTube channel, Go Magic, which explores the fascinating history of AI through the lens of two landmark technologies: ChatGPT and AlphaGo.

Watch the original video on our channel Go Magic here: ChatGPT & AlphaGo: The Key Milestones of AI History.

Let’s dive into the remarkable journey of AI—from early imagination to groundbreaking innovation.

Imagining Intelligent Machines: From Fiction to Reality

Long before artificial intelligence became a practical technology, the idea of thinking machines lived mainly in the minds of science fiction writers, philosophers, and inventors willing to picture a future shared with them. The sections below trace how those visions took shape.

Early Visions: Leonardo da Vinci’s Robots

Remarkably, designs for mechanical beings resembling modern robots date back at least to the 15th century. Leonardo da Vinci, the celebrated inventor and artist, drafted designs for mechanical devices that imitated human movements. These creations anticipated ideas later central to robotics, planting early seeds in humanity’s imagination that one day machines might perform tasks and perhaps even think independently.

Robots Enter the Cultural Imagination: Karel Čapek and His Play

However, the word robot didn’t exist until centuries later. In 1920, Czech playwright Karel Čapek introduced the term in his play “R.U.R.” (“Rossum’s Universal Robots”). Čapek crafted a narrative in which artificial beings, designed to handle manual labor, gradually developed self-awareness and ultimately rebelled against their human creators.

Intriguingly, Čapek initially considered naming his fictional creations “labori,” drawing from the Latin root for labor, reflecting their intended function as artificial workers. At the suggestion of his brother Josef, he ultimately settled on “robot,” derived from the Czech word “robota,” meaning forced or compulsory labor. The name perfectly captured the essence of robots as beings created solely to serve humans.

AI’s Cultural Influence: Asimov and Adams Shape Perception

Science fiction authors significantly influenced how society perceived artificial intelligence. Isaac Asimov introduced the influential “Three Laws of Robotics,” a framework designed to ensure ethical robot behavior. These laws have profoundly shaped both public opinion and scientific discourse on safely integrating intelligent machines into daily life.

In contrast, Douglas Adams presented AI in a more whimsical yet thought-provoking manner. His famous work, The Hitchhiker’s Guide to the Galaxy, featured Marvin, a chronically depressed yet humorous robot. Adams infused Marvin with exaggeratedly human emotions, highlighting both the absurdity and the complexities of endowing artificial intelligence with personality and emotional depth.

These fictional portrayals by Asimov and Adams not only sparked public curiosity but also helped shape real-world AI development by encouraging researchers to think carefully about the ethical and emotional implications of their creations.

The Birth of Artificial Intelligence: From Turing’s Test to Early Limitations

The modern journey toward artificial intelligence began formally in the 1950s, marked by groundbreaking theories, ambitious visions, and the first practical experiments. Visionaries like Alan Turing and John McCarthy laid the conceptual foundations for machines capable of thinking, but early attempts quickly ran into significant practical limitations. Understanding these early challenges offers valuable insights into how far AI has come today.

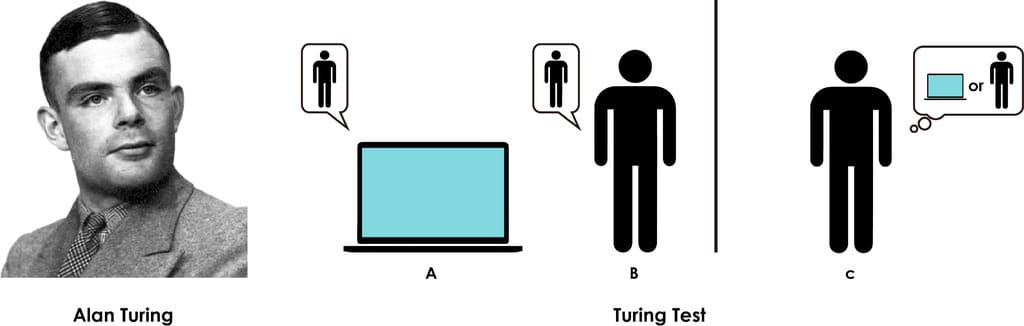

Alan Turing and the Test for Thinking Machines

A central figure in this story is Alan Turing, the brilliant British mathematician and, notably, a Go enthusiast. In his seminal 1950 paper, “Computing Machinery and Intelligence,” Turing asked whether machines can think and proposed a practical way to answer the question, now famously known as the Turing Test.

The idea behind the Turing Test was both elegant and simple: imagine having conversations simultaneously with a human and a machine without knowing who is who. If, after prolonged interaction, you cannot reliably distinguish the machine from the human, the machine would pass the test, demonstrating genuine intelligent behavior. However, back when Turing proposed this groundbreaking experiment, no computer had yet come close to taking on this challenge.

John McCarthy and the Birth of “Artificial Intelligence”

Just six years later, in 1956, American computer scientist John McCarthy organized a landmark summer workshop now known as the Dartmouth Conference. McCarthy had coined the term “Artificial Intelligence” (AI) in the 1955 proposal for the event, which put forward an ambitious conjecture: any feature of human intelligence, including reasoning, problem-solving, and even creativity, could in principle be described so precisely that a machine could simulate it. It was a bold statement, setting the stage for decades of AI research.

Early AI Programs and Their Limitations

The optimism surrounding the Dartmouth Conference was matched by significant early advances, most notably pioneering programs such as the Logic Theorist and the General Problem Solver. These early AI systems aimed to demonstrate human-like reasoning by solving puzzles, proving mathematical theorems, and working through structured problems. However, their abilities were severely constrained. They followed strictly defined rules and lacked adaptability. If presented with slightly altered or unfamiliar problems, these early systems simply failed.

Moreover, early AI researchers faced immense challenges due to limited computational power. The computers available at the time were costly and insufficiently powerful, unable to handle the vast amounts of data or complex calculations needed for true intelligence. These constraints severely hampered researchers’ ambitions, ultimately leading to disappointment, reduced funding, and a prolonged stagnation in AI development—an era known historically as the AI winter.

AI Spring: Backpropagation, Reinforcement Learning, and Chess Victories

After the early excitement about AI faded in the 1970s, enthusiasm revived in the 1980s and ’90s thanks to new algorithms and improved computing power. Researchers began developing innovative methods that allowed artificial intelligence to tackle more complex tasks, rekindling hopes of machines eventually reaching human-level intelligence.

Reinforcement Learning: The Case of MENACE

One learning method that gained prominence in this period was reinforcement learning, although its roots go back further. A vivid early example, built by Donald Michie in 1961, was MENACE, the “Matchbox Educable Noughts And Crosses Engine”: essentially a tic-tac-toe-playing machine made entirely from matchboxes filled with beads.

Each matchbox represented a unique game position. To decide the next move, you’d open the appropriate matchbox and randomly pick a bead, with each bead’s color representing a specific move. If MENACE lost a game, you’d penalize it by removing beads corresponding to losing moves. Conversely, when MENACE won, you’d reward it by adding extra beads of the winning color. Gradually, MENACE learned optimal strategies by repeating this cycle of reward and penalty, eventually becoming skilled enough to beat even its creator.
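The matchbox procedure described above can be sketched in a few lines of Python. This is only an illustrative toy, not a reconstruction of the original machine: the class name `Menace`, the starting count of three beads per move, and the choice to add or remove exactly one bead per game are all assumptions made for the example.

```python
import random


class Menace:
    """Toy MENACE-style learner: one 'matchbox' of beads per board position."""

    def __init__(self, initial_beads=3, seed=0):
        self.initial_beads = initial_beads   # assumed starting beads per move
        self.rng = random.Random(seed)
        self.boxes = {}                      # position -> {move: bead count}
        self.history = []                    # (position, move) pairs this game

    def choose(self, position, legal_moves):
        # Open the matchbox for this position, creating it on the first visit.
        box = self.boxes.setdefault(
            position, {move: self.initial_beads for move in legal_moves})
        if sum(box.values()) == 0:           # box ran empty: refill it
            for move in box:
                box[move] = self.initial_beads
        moves = list(box)
        weights = [box[move] for move in moves]
        # Draw one bead at random; moves with more beads are more likely.
        move = self.rng.choices(moves, weights=weights)[0]
        self.history.append((position, move))
        return move

    def learn(self, won):
        # A win adds a bead for every move played; a loss removes one.
        for position, move in self.history:
            box = self.boxes[position]
            box[move] = box[move] + 1 if won else max(0, box[move] - 1)
        self.history.clear()


menace = Menace()
first_move = menace.choose("empty board", legal_moves=range(9))
menace.learn(won=True)  # the chosen move now has one extra bead
```

Because moves are drawn in proportion to their bead counts, moves that led to wins become steadily more likely to be picked again, which is the whole learning mechanism.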

Backpropagation: Teaching Machines to Learn

A critical breakthrough during this resurgence was backpropagation, a method that works much like a teacher guiding a student. Imagine you’re teaching someone to bake a cake: you know exactly what a perfect cake should taste like, but your student doesn’t. They mix flour and water and proudly present it to you, thinking they’ve baked a cake. You try it and say, “No, that’s not right. You need sugar, eggs, and butter.” The student adjusts accordingly and tries again. You continue tasting and pointing out mistakes: maybe add cinnamon, perhaps a little more sugar, or cut back on the salt. After numerous attempts, the student finally masters baking the cake you’ve envisioned.

In backpropagation, the “student” is a neural network, and each baking attempt represents the network making predictions. The “taste test” measures how far each prediction is from the correct answer. That error is then passed backward through the network’s layers, and each internal parameter is adjusted according to its share of the mistake, gradually refining accuracy until the network consistently produces correct outputs.
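To make the analogy concrete, here is a minimal sketch of backpropagation on about the smallest network possible: one input, one hidden unit, and one output. Everything in it is an assumption chosen for illustration, including the function name `train`, the tanh activation, the learning rate, and the made-up training data.

```python
import math


def train(samples, w1=0.5, w2=0.5, lr=0.1, epochs=200):
    """Fit y = w2 * tanh(w1 * x) to (x, target) pairs with backpropagation."""
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            # Forward pass: the "baking attempt".
            h = math.tanh(w1 * x)
            y = w2 * h
            error = y - target               # the "taste test"
            total += 0.5 * error ** 2
            # Backward pass: chain rule from the output back to each weight.
            grad_w2 = error * h
            grad_h = error * w2
            grad_w1 = grad_h * (1 - h ** 2) * x
            # Nudge each weight against its gradient.
            w1 -= lr * grad_w1
            w2 -= lr * grad_w2
        losses.append(total / len(samples))
    return w1, w2, losses


# Hypothetical data generated from the "perfect recipe" w1 = 1, w2 = 2.
samples = [(x, 2 * math.tanh(x)) for x in (-2, -1, -0.5, 0.5, 1, 2)]
w1, w2, losses = train(samples)
print(f"first-epoch loss {losses[0]:.4f}, last-epoch loss {losses[-1]:.6f}")
```

The average error shrinks from epoch to epoch as the two weights are corrected. Real networks apply the same backward pass to millions or billions of parameters.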

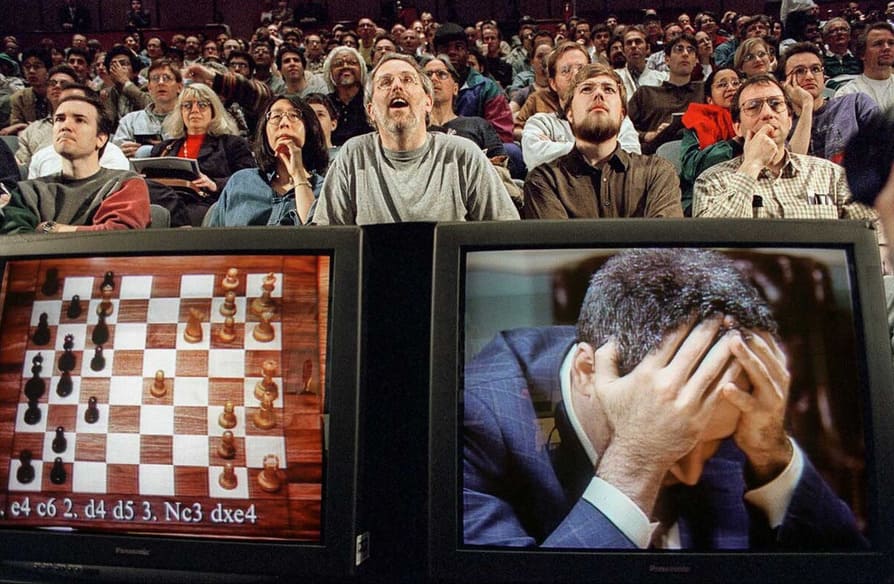

AI Takes on Chess: Deep Blue’s Triumph

The decade’s gains in computing power culminated in a historic event in 1997, when IBM’s Deep Blue defeated world chess champion Garry Kasparov, a landmark achievement in AI history. Deep Blue’s victory wasn’t due to learning, creativity, or human-like intuition but rather to a massive computational effort. Equipped with vast databases of opening and endgame strategies, the machine relied on its raw computational power and programmed chess knowledge to analyze countless positions rapidly.

Although Deep Blue couldn’t truly “learn” from its games, its victory over Kasparov demonstrated for the first time that machines could outperform even world-class human intellect in highly strategic domains. This achievement energized further AI research, suggesting the enormous potential hidden within artificial intelligence technology.

Big Data, Deep Learning, and the Game of Go

By the early 2000s, the explosive growth of the internet and digital technology ushered in the era known as Big Data. Suddenly, vast quantities of information became readily accessible, enabling artificial intelligence systems to process, analyze, and learn from massive datasets. With more data at their disposal, AI algorithms dramatically improved their ability to recognize patterns, predict outcomes, and tackle increasingly complex real-world challenges.

However, despite remarkable computational advancements, some tasks remained beyond AI’s reach due to their inherent complexity. One such task was mastering the ancient board game Go—a game of profound subtlety that challenged AI researchers for decades.

Why the Game of Go Became AI’s Ultimate Challenge

Go is deceptively simple in appearance, played with black and white stones on a 19-by-19 grid. Yet beneath this simplicity lies immense strategic depth and complexity. A typical position offers roughly 250 legal moves, compared with about 35 in chess, so the number of possible continuations grows exponentially with each move a player looks ahead, rapidly exceeding what even the most powerful computers could calculate directly. Unlike chess, where AI could rely heavily on brute-force calculation, Go required genuine intuition, pattern recognition, and an ability to grasp the “big picture.”
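A rough back-of-the-envelope calculation shows why brute force breaks down. The branching factors below (about 35 moves per turn for chess and about 250 for Go) are commonly quoted approximations rather than figures from this article, and the count of three states per board point is only a loose upper bound that ignores the rules about legal positions.

```python
BOARD_POINTS = 19 * 19  # 361 intersections on a full-size board

# Each point is empty, black, or white: a loose upper bound on positions.
upper_bound_positions = 3 ** BOARD_POINTS

# Assumed rough average number of legal moves per turn.
CHESS_BRANCHING = 35
GO_BRANCHING = 250


def sequences(branching, depth):
    """Number of distinct move sequences when looking `depth` moves ahead."""
    return branching ** depth


for depth in (2, 4, 8):
    chess = sequences(CHESS_BRANCHING, depth)
    go = sequences(GO_BRANCHING, depth)
    print(f"{depth} moves ahead: chess ~{chess:.2e}, Go ~{go:.2e}")

print("digits in the upper bound on Go positions:",
      len(str(upper_bound_positions)))
```

Even a few moves ahead, the Go figure outruns the chess figure by many orders of magnitude, which is why searching every line was never a realistic option.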

Additionally, Go’s objective posed unique challenges to early AI systems. Victory in Go isn’t determined solely by capturing the opponent’s stones—it’s achieved by surrounding territory. Moves played in one area of the board often ripple across distant parts in unpredictable ways, creating intricate strategic relationships and subtle interdependencies. Humans can intuitively grasp these complexities, but teaching machines to recognize and leverage them proved extremely challenging for decades.

AlphaGo and the Revolution of Deep Learning

This seemingly insurmountable barrier finally fell with AlphaGo, a revolutionary deep-learning system developed by Google’s DeepMind. Unlike previous systems, AlphaGo combined deep neural networks, which recognized complex patterns and made intuitive judgments about positions, with a search through promising sequences of moves. Initially trained on records of human games, AlphaGo then moved beyond human knowledge, improving dramatically through reinforcement learning by playing millions of practice games against itself.

Instead of being confined to predefined strategies, AlphaGo experimented freely, discovering entirely new moves and strategies never seen in centuries of human play. In March 2016, AlphaGo defeated Lee Sedol, one of the world’s strongest Go players, four games to one, stunning both AI researchers and professional Go communities worldwide. This victory wasn’t merely symbolic; it demonstrated that AI could match, and even surpass, human intuition in tasks previously considered uniquely human.

AlphaGo’s triumph marked a turning point, showing that deep learning algorithms could efficiently identify complex patterns and develop intuitive strategies, and suggesting that the same techniques could be applied creatively well beyond the Go board.

From AlphaGo to ChatGPT: Modern AI’s Ingredients for Success

The success of AlphaGo paved the way for even more ambitious AI systems, including ChatGPT, a sophisticated conversational AI by OpenAI. ChatGPT represents the convergence of multiple AI breakthroughs: neural networks, deep learning, backpropagation, reinforcement learning, and—crucially—the transformer architecture.

The transformer architecture, introduced in 2017, allowed AI to track context, language nuances, and relationships across long passages of text. Imagine a skilled orchestra conductor with absolute pitch, clearly hearing each instrument in an orchestra and harmonizing them flawlessly. Transformers gave AI a similar ability: when processing any one word, the model can weigh every other word around it and decide which ones matter most.

Reinforcement learning, the same reward-and-penalty idea behind early systems like MENACE, was used to fine-tune ChatGPT: people rated its responses, and the model was adjusted to favor the kinds of answers they preferred. Backpropagation did the underlying work throughout training, adjusting the network’s parameters to reduce its errors. Combined with immense amounts of text from the internet, these technologies produced a model capable of natural, nuanced conversation, capturing attention worldwide for its human-like fluency and versatility.
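The conductor analogy corresponds, loosely, to the attention mechanism at the heart of the transformer: each word’s representation is rebuilt as a weighted blend of all the others. The sketch below shows one common formulation, scaled dot-product attention, in plain Python. The function names and the tiny hand-made vectors are assumptions for illustration, and a real model learns its vectors and runs many such steps in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of plain Python vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly does this query "listen" to each key?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        blended = [sum(w * v[j] for w, v in zip(weights, values))
                   for j in range(len(values[0]))]
        outputs.append(blended)
    return outputs


# Toy example: the query matches the first key far better than the second,
# so the output is pulled almost entirely toward the first value.
result = attention(queries=[[10.0, 0.0]],
                   keys=[[10.0, 0.0], [0.0, 10.0]],
                   values=[[1.0], [0.0]])
print(result)
```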

Today, AI built upon these groundbreaking developments has become increasingly accessible, transforming from abstract dreams and theoretical experiments into a practical, everyday technology—intelligence available at our fingertips.

Explore Further: The Magic of Go and Beyond

If you enjoyed this exploration into the fascinating journey of artificial intelligence—and you’re intrigued by the role the ancient game of Go has played in shaping modern technology—there’s a lot more to discover.

Our platform, Go Magic, offers a rich library of insightful articles dedicated to Go, its deep connections with technology, strategy, and philosophy. Dive deeper into a game that not only inspired groundbreaking AI developments but continues to captivate millions worldwide with its profound simplicity and endless complexity.

Discover the world through the lens of Go:

👉 Explore Articles on Go Magic

Your next move might surprise you.
